Migrating the SQL Server DemoDB to PostgreSQL

The installation media for Content Manager 9.2 comes with a demonstration dataset that can be used for testing and training. Although I think the data within it is junk, it's been with the product for so long that I can't help but continue using it. To mount the dataset you have to restore a backup file onto a SQL Server instance and then register it within the Enterprise Studio.

SQL Server is a bit too expensive for my testing purposes, so I need to get this dataset into PostgreSQL. In this post I'll show how I accomplished this. The same approach could be taken for any migration between SQL Server and PostgreSQL.

I'm starting this post having already restored the DemoDB onto a SQL Server:

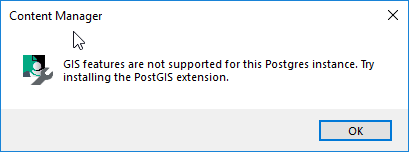

If you look at the connection details for the highlighted dataset, you'll see that GIS is enabled for it. My target environment will not support GIS, which prevents me from using the migrate feature when creating my new dataset. If I tried to migrate it directly to my target environment I would receive the error message shown below.

Even if I try to migrate from SQL Server to SQL Server, I can't migrate unless GIS is retained...

To use the migration feature I first need a dataset that does not support GIS. I'll use the export feature of the GIS-enabled dataset to give me something I can work with. Then I'll import that into a blank dataset without GIS enabled.

The first export will be to SQL Server, but without GIS enabled. When prompted, I just need to provide a location for the exported script and data.

Once the export completed, I created a new dataset. This dataset was not initialized with any data, nor was GIS enabled. The screenshot below details the important dataset properties to be configured during creation.

After it was created I could see both datasets within the Enterprise Studio, as shown below.

Next I switched over to SQL Server Management Studio and opened the script generated as part of the export of the DemoDB. I then executed the script within the database used for the newly created DemoDB No GIS dataset. This populates the empty dataset with all of the data from the original DemoDB. I will lose all of the GIS data, but that's OK with me.
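
If you want some reassurance that the script loaded everything, one option is to compare per-table row counts between the original database and the new one. The sketch below is not part of Content Manager or its tooling. It assumes any Python DB-API connection (for example, one opened with a SQL Server or PostgreSQL driver) and uses in-memory SQLite databases as stand-ins so it runs on its own. The table names `TSRECORD` and `TSLOCATION` and the helper names are placeholders chosen for illustration; substitute the tables you actually care about.

```python
import sqlite3


def table_row_counts(conn, tables):
    """Return {table_name: row_count} using any DB-API connection."""
    counts = {}
    cur = conn.cursor()
    for table in tables:
        # Table names cannot be bound as parameters, so pass only trusted names.
        cur.execute(f"SELECT COUNT(*) FROM {table}")
        counts[table] = cur.fetchone()[0]
    cur.close()
    return counts


def diff_counts(source, target):
    """Return {table: (source_count, target_count)} where the counts differ."""
    return {t: (n, target.get(t)) for t, n in source.items() if target.get(t) != n}


def make_demo_db(row_counts):
    """Build an in-memory SQLite database with the given number of rows per table."""
    conn = sqlite3.connect(":memory:")
    for table, n in row_counts.items():
        conn.execute(f"CREATE TABLE {table} (id INTEGER PRIMARY KEY)")
        conn.executemany(
            f"INSERT INTO {table} (id) VALUES (?)", [(i,) for i in range(n)]
        )
    conn.commit()
    return conn


# Stand-ins for the original DemoDB and the "DemoDB No GIS" database.
# The second one is deliberately short a row so the check has something to report.
source = make_demo_db({"TSRECORD": 5, "TSLOCATION": 3})
target = make_demo_db({"TSRECORD": 5, "TSLOCATION": 2})

tables = ["TSRECORD", "TSLOCATION"]  # placeholder table names
mismatches = diff_counts(
    table_row_counts(source, tables), table_row_counts(target, tables)
)
print(mismatches)  # an empty dict would mean every listed table matched
```

Against real databases you would replace the two `make_demo_db` calls with connections to the source and target. Matching counts don't prove the data is identical, but a mismatch is a quick signal that part of the import failed.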

Now I can create a new dataset on my workgroup server. During its creation I must specify a bulk loading path. It's used in the same manner as the export location in the first few steps. The migration actually performs an export first and then imports those files, just like I did in SQL Server.

On the last step of the creation wizard I can select my DemoDB No GIS dataset, as shown below.

Now the Enterprise Studio shows me all three datasets.