Migrating On-premise PostgresSQL to Cloud SQL

In this post I'll cover what it takes to move an on-premise PostgresSQL database into a Cloud SQL instance. I've already migrated a workgroup server, but I could have just as easily kept my workgroup server on-premise. At the end of this I'll have all of my Content Manager infrastructure within a secure, private, fully-managed cloud infrastructure.

The first task is to generate a SQL dump from PostgresSQL but with no extension related statements. I'll need to install GnuWin so that I can leverage the sed command. I executed the pg_dump command, piped the results through sed and then to disk (as shown below).

Next I created a bucket named "postgressql-backup" and uploaded the file. Although I could have done this via the command line, I preferred to use the cloud console.

Next, I go over to my Cloud SQL instance and select import.

Then I can click Browse, navigate to the file in the bucket, and select it.

Lastly, I need to select the target database within my instance and then click Import.

After clicking Import, I returned to the Cloud SQL overview dashboard to monitor the progress. Eventually I'll see a notification once the import has completed.

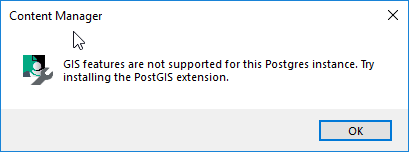

Now I connect to my Content Manager Workgroup Server and launch the Enterprise Studio. Here I am registering a dataset because I've also decommissioned the original workgroup server. Alternatively, I could have modified the connection string of an existing dataset. I used all of the settings from the Cloud SQL instance when configuring the connection string, as shown below.

I completed the wizard and was shown a message indicting success.

A quick check of the client shows me everything worked.

Sweet! Now my DemoDB dataset fully resides within the cloud!