ELK your CM audit logs

In this post I'll show how I've used Elasticsearch, Kibana, and Filebeat to build a dashboard from my CM audit logs. I created an Ubuntu server and installed all of the ELK components on it. If you don't have a spare server, you don't need one to play along; just install everything locally. A separate server keeps the stack apart from Content Manager and lets me work with it independently.

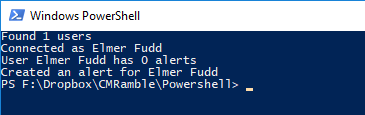

First I installed Filebeat on the server that generates my audit logs. I configured it to read from the Audit Log output folder and to ignore the first line of each file. You can see this configuration below.
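Filebeat's own configuration is YAML and isn't reproduced here. The Python sketch below only models the behaviour that configuration produces; it is not Filebeat's implementation. Each file's first line is skipped, and every remaining line becomes one event that carries the raw text in a `message` field. The `*.log` glob and the UTF-8 encoding are assumptions, not details from the original setup.

```python
from pathlib import Path
from typing import Dict, Iterator


def harvest_audit_lines(folder: Path, pattern: str = "*.log",
                        encoding: str = "utf-8") -> Iterator[Dict[str, str]]:
    """Yield one event per audit line, skipping the first line of each file.

    Assumptions (not from the original setup): the glob pattern and the file
    encoding. Adjust both to match your Audit Log output folder.
    """
    for path in sorted(folder.glob(pattern)):
        with path.open(encoding=encoding) as handle:
            next(handle, None)  # drop the first line of the file
            for raw in handle:
                line = raw.rstrip("\r\n")  # keep tabs, strip only the line ending
                if line:  # ignore blank lines
                    yield {"message": line}
```

Each event holds the whole line in one field. That matches what shows up in the index before any parsing is added.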

I completed the configuration by sending the output straight to Elasticsearch. Then I started Filebeat and let it run.

When I inspect the index, I can see that Filebeat has stuffed each line of the file into a single message field.

That doesn't help me: I need to break each line into separate fields. The old audit log viewer shows the order of the columns and what each one contains, and I used that to define a regular expression that extracts the properties.
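The same idea can be tried in plain Python before moving to grok. This is a minimal sketch, not the author's expression. It takes the 19 columns in the order used by the grok pattern in the pipeline and builds one named group per column. Grok's `DATA` and `NUMBER` patterns are approximated with hand-written regular expressions, so edge cases may differ from real grok.

```python
import re
from typing import Dict, Optional

# Column order as listed by the old audit log viewer. "DATA" columns hold free
# text and "NUMBER" columns hold numbers, matching the grok pattern in the pipeline.
AUDIT_COLUMNS = [
    ("EventDescription", "DATA"), ("EventTime", "DATA"), ("User", "DATA"),
    ("EventObject", "DATA"), ("EventComputer", "DATA"), ("UserUri", "NUMBER"),
    ("TimeSource", "DATA"), ("TimeServer", "DATA"), ("Owner", "DATA"),
    ("RelatedItem", "DATA"), ("Comment", "DATA"), ("ExtraDetails", "DATA"),
    ("EventId", "NUMBER"), ("ObjectId", "NUMBER"), ("ObjectUri", "NUMBER"),
    ("RelatedId", "NUMBER"), ("RelatedUri", "NUMBER"), ("ServerIp", "DATA"),
    ("ClientIp", "DATA"),
]

# Rough stand-ins for grok's DATA (lazy match-anything) and NUMBER patterns.
GROK_EQUIVALENTS = {"DATA": r".*?", "NUMBER": r"[+-]?\d+(?:\.\d+)?"}

# One named group per column, separated by tabs and anchored at the end of the line.
AUDIT_LINE = re.compile(
    r"\t".join(f"(?P<{name}>{GROK_EQUIVALENTS[kind]})" for name, kind in AUDIT_COLUMNS)
    + r"$"
)


def parse_audit_line(line: str) -> Optional[Dict[str, str]]:
    """Split one tab-separated audit line into named fields, or return None."""
    match = AUDIT_LINE.match(line)
    return match.groupdict() if match else None
```

Anchoring the pattern at the end of the line means a line with the wrong number of columns fails to match instead of being split incorrectly.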

I used a grok processor in an ingest pipeline to apply the regular expression. After it come convert processors that turn the numeric values into integers, and a remove processor that drops the message field (so that it doesn't confuse things later). The pipeline I came up with is as follows:

```json
{
  "description": "Convert Content Manager Offline Audit Log data to indexed data",
  "processors": [
    {
      "grok": {
        "field": "message",
        "patterns": [ "%{DATA:EventDescription}\t%{DATA:EventTime}\t%{DATA:User}\t%{DATA:EventObject}\t%{DATA:EventComputer}\t%{NUMBER:UserUri}\t%{DATA:TimeSource}\t%{DATA:TimeServer}\t%{DATA:Owner}\t%{DATA:RelatedItem}\t%{DATA:Comment}\t%{DATA:ExtraDetails}\t%{NUMBER:EventId}\t%{NUMBER:ObjectId}\t%{NUMBER:ObjectUri}\t%{NUMBER:RelatedId}\t%{NUMBER:RelatedUri}\t%{DATA:ServerIp}\t%{DATA:ClientIp}$" ]
      }
    },
    { "convert": { "field": "UserUri", "type": "integer" } },
    { "convert": { "field": "ObjectId", "type": "integer" } },
    { "convert": { "field": "ObjectUri", "type": "integer" } },
    { "convert": { "field": "RelatedId", "type": "integer" } },
    { "convert": { "field": "RelatedUri", "type": "integer" } },
    { "remove": { "field": "message" } }
  ],
  "on_failure": [
    {
      "set": {
        "field": "error",
        "value": "{{ _ingest.on_failure_message }}"
      }
    }
  ]
}
```

Now when I test the pipeline, I can see that the audit log data is being parsed correctly. Testing uses the pipeline simulator. You submit the pipeline definition along with some sample data, and it returns each sample document as it looks after the processors have run.

Result of posting to the pipeline simulator
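The simulator is the `_ingest/pipeline/_simulate` endpoint. Below is a minimal standard-library Python sketch of that request. The Elasticsearch address is an assumption, no authentication is included, and each sample document mimics what Filebeat sends: the raw line in `message`.

```python
import json
import urllib.request
from typing import Dict, List

ES_URL = "http://localhost:9200"  # assumption: your Elasticsearch endpoint


def build_simulate_request(pipeline: Dict, sample_lines: List[str]) -> urllib.request.Request:
    """Build a POST to the ingest simulate API for an unsaved pipeline definition."""
    body = {
        "pipeline": pipeline,
        # Each sample document carries one raw audit line, as Filebeat would send it.
        "docs": [{"_source": {"message": line}} for line in sample_lines],
    }
    return urllib.request.Request(
        f"{ES_URL}/_ingest/pipeline/_simulate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Passing the request to `urllib.request.urlopen` sends it. Elasticsearch replies with a `docs` array holding each sample document as the processors left it.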

As shown below, I used Postman to submit the validated pipeline to Elasticsearch under the name "cm-audit".
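For anyone who would rather script this step than use Postman, here is a minimal Python sketch of the same call, `PUT _ingest/pipeline/cm-audit`, using only the standard library. The Elasticsearch address is an assumption, and no authentication is included.

```python
import json
import urllib.request
from typing import Dict

ES_URL = "http://localhost:9200"  # assumption: your Elasticsearch endpoint


def build_put_pipeline_request(name: str, pipeline: Dict) -> urllib.request.Request:
    """Build the PUT that stores a pipeline definition under the given name."""
    return urllib.request.Request(
        f"{ES_URL}/_ingest/pipeline/{name}",
        data=json.dumps(pipeline).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="PUT",
    )
```

Sending it with `urllib.request.urlopen` stores the pipeline, and Elasticsearch acknowledges success with `{"acknowledged": true}`.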

Now I need to go back to my Content Manager server and update the Filebeat configuration so that its output goes through the newly created pipeline. I do this by adding a pipeline setting to the Elasticsearch output options.
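In filebeat.yml this is the `pipeline` option under `output.elasticsearch`. To show roughly what that option amounts to, here is a Python sketch of a `_bulk` request that routes every document through `cm-audit` via the `pipeline` query parameter. It is not Filebeat's actual code. The index name is hypothetical, and the sketch assumes Elasticsearch 7 or later, where bulk actions need no `_type`.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, Iterable

ES_URL = "http://localhost:9200"  # assumption: your Elasticsearch endpoint


def build_bulk_request(index: str, events: Iterable[Dict], pipeline: str) -> urllib.request.Request:
    """Build a _bulk request that indexes each event through the named ingest pipeline."""
    lines = []
    for event in events:
        lines.append(json.dumps({"index": {"_index": index}}))  # action line
        lines.append(json.dumps(event))                          # document source
    body = "\n".join(lines) + "\n"  # the bulk API requires a trailing newline
    query = urllib.parse.urlencode({"pipeline": pipeline})
    return urllib.request.Request(
        f"{ES_URL}/_bulk?{query}",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/x-ndjson"},
        method="POST",
    )
```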

Next I stop Filebeat and delete its local registry file in ProgramData. This lets Filebeat re-process the audit log files from the beginning.
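A minimal Python sketch of that reset follows, to be run only after the Filebeat service has stopped. The registry's location and shape vary between Filebeat versions: it may be a single file or a directory. For that reason the path is a parameter here rather than a guess, and the function handles both cases.

```python
import shutil
from pathlib import Path


def reset_filebeat_registry(registry_path: Path) -> bool:
    """Delete the Filebeat registry so every audit log file is read again.

    Returns True if something was removed. Stop Filebeat first, because it
    updates the registry while it runs.
    """
    if registry_path.is_dir():
        shutil.rmtree(registry_path)
        return True
    if registry_path.exists():
        registry_path.unlink()
        return True
    return False
```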

Before starting it back up, though, I need to delete the existing index so that the old, unparsed documents don't linger.

Delete action in the Elasticsearch Head
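The same deletion can be scripted. Here is a minimal Python sketch; `filebeat-example` in the check below is a hypothetical index name. Look up the real name first (for example with `GET _cat/indices`), because a delete cannot be undone. Rejecting wildcards and comma-separated lists is a deliberate design choice here, meant to avoid deleting more than intended.

```python
import urllib.parse
import urllib.request

ES_URL = "http://localhost:9200"  # assumption: your Elasticsearch endpoint


def build_delete_index_request(index: str) -> urllib.request.Request:
    """Build a DELETE for exactly one index; wildcards and lists are refused."""
    if not index or any(ch in index for ch in "*,"):
        raise ValueError("pass a single, fully spelled-out index name")
    return urllib.request.Request(f"{ES_URL}/{urllib.parse.quote(index)}", method="DELETE")
```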

Now I can start Filebeat again and let it populate Elasticsearch. When I check the index in Kibana, I can see that my custom audit log fields are there.
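The same check can be made against the index mapping instead of in Kibana. The minimal Python sketch below lists which of the pipeline's field names are absent from a `GET <index>/_mapping` response. It assumes the typeless layout used by Elasticsearch 7 and later, `{index: {"mappings": {"properties": {...}}}}`. Older versions nest a type name under `mappings`, and this sketch would not see those fields.

```python
from typing import Dict, Iterable, List

# Field names produced by the grok pattern in the cm-audit pipeline.
AUDIT_FIELDS = [
    "EventDescription", "EventTime", "User", "EventObject", "EventComputer",
    "UserUri", "TimeSource", "TimeServer", "Owner", "RelatedItem", "Comment",
    "ExtraDetails", "EventId", "ObjectId", "ObjectUri", "RelatedId",
    "RelatedUri", "ServerIp", "ClientIp",
]


def missing_audit_fields(mapping_response: Dict,
                         expected: Iterable[str] = AUDIT_FIELDS) -> List[str]:
    """Return the expected fields that no index in the _mapping response defines."""
    present = set()
    for index_body in mapping_response.values():
        # Collect top-level property names from each index's typeless mapping.
        present.update(index_body.get("mappings", {}).get("properties", {}))
    return [field for field in expected if field not in present]
```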

The last step is to create some visualizations and place them on a dashboard. In my instance I've also set up Winlogbeat (which I'll cover in another post), so I have plenty of information to use in my dashboards. As a quick example, I'll show the breakdown of event types and users.
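A breakdown like this boils down to a terms aggregation. Below is a minimal Python sketch of an equivalent search body, to be posted to `<index>/_search`. It assumes the default dynamic mapping, which indexes strings as `text` with a `.keyword` sub-field that can be aggregated on. The bucket size is arbitrary.

```python
from typing import Dict


def event_and_user_breakdown(size: int = 10) -> Dict:
    """Search body counting audit events per event type and per user."""
    return {
        "size": 0,  # only the aggregation buckets are needed, not the hits
        "aggs": {
            "event_types": {"terms": {"field": "EventDescription.keyword", "size": size}},
            "users": {"terms": {"field": "User.keyword", "size": size}},
        },
    }
```

Each bucket in the response pairs a value with its document count, which is the same information a Kibana bar or pie chart over those fields displays.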