Enriching Record Metadata via the Google Vision API

Often the title of an uploaded file conveys little real information. We can ask users to supply additional terms, but we can also use machine learning models to tag records automatically. This improves the user experience and gives users more ways to find records through search.

Automatically generated keywords, provided by the Vision API

In the rest of this post I'll show how to build this plugin and integrate it with the Google Vision API.

First things first: I created a Visual Studio solution containing one class library. The library contains one class, named Addin, which derives from the TrimEventProcessorAddIn base class. That is the minimum needed for it to be considered an "Event Processor Addin".

```csharp
using HP.HPTRIM.SDK;

namespace CMRamble.EventProcessor.VisionApi
{
    public class Addin : TrimEventProcessorAddIn
    {
        public override void ProcessEvent(Database db, TrimEvent evt)
        {
        }
    }
}
```

Next I'll add a second class library project with a skeleton method named AttachVisionLabelsAsTerms. The Event Processor will invoke this method, which attaches keywords to the given record by calling the Google Vision API. The event processor itself knows nothing about the Google Vision API.

```csharp
using HP.HPTRIM.SDK;

namespace CMRamble.VisionApi
{
    public static class RecordController
    {
        public static void AttachVisionLabelsAsTerms(Record rec)
        {
        }
    }
}
```

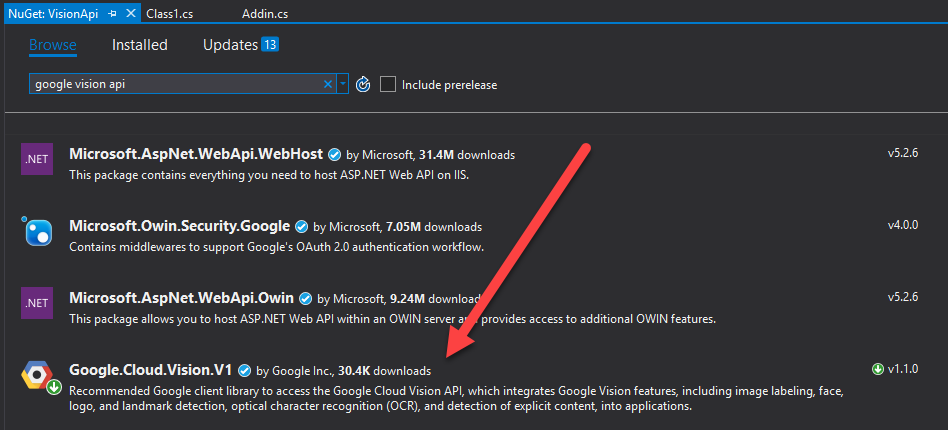

Before I can work with the Google Vision API, I have to add the Google.Cloud.Vision.V1 package to the project via the NuGet package manager.

The online documentation provides this sample code, which invokes the API:

```csharp
using System;
using Google.Cloud.Vision.V1;

var image = Image.FromFile(filePath);
var client = ImageAnnotatorClient.Create();
var response = client.DetectLabels(image);
foreach (var annotation in response)
{
    if (annotation.Description != null)
        Console.WriteLine(annotation.Description);
}
```

I'll drop this into a new static method in the RecordController class of my VisionApi class library. To reuse the sample code, I'll pass the file path into the method and return a list of labels instead of writing them to the console. I'll mark the method private so that it can't be called directly from the Event Processor Addin.

```csharp
// requires: using System.Collections.Generic; using Google.Cloud.Vision.V1;
private static List<string> InvokeDetectLabels(string filePath)
{
    List<string> labels = new List<string>();
    var image = Image.FromFile(filePath);
    var client = ImageAnnotatorClient.Create();
    var response = client.DetectLabels(image);  // one EntityAnnotation per detected label
    foreach (var annotation in response)
    {
        if (annotation.Description != null)
            labels.Add(annotation.Description);
    }
    return labels;
}
```
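Nothing in the method above guards against blank or repeated descriptions before they become keywords. As a minimal sketch, assuming keyword names should be compared case-insensitively, a hypothetical NormalizeLabels helper could clean the list first. It is written as a self-contained C# 9 top-level program, with plain strings standing in for the annotation descriptions, so it runs without the Google or Content Manager libraries.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper (not part of the Vision API): cleans up label descriptions
// before they are turned into keywords.
// - drops null/blank descriptions (the sample already skips nulls)
// - trims surrounding whitespace
// - removes duplicates case-insensitively, keeping the first spelling seen
List<string> NormalizeLabels(IEnumerable<string> descriptions)
{
    var seen = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
    var result = new List<string>();
    foreach (var description in descriptions)
    {
        if (string.IsNullOrWhiteSpace(description)) continue;
        var label = description.Trim();
        if (seen.Add(label)) result.Add(label);  // Add returns false for a duplicate
    }
    return result;
}

var labels = NormalizeLabels(new[] { " Cat ", "cat", null, "", "Whiskers" });
Console.WriteLine(string.Join(", ", labels));  // prints "Cat, Whiskers"
```

In InvokeDetectLabels this would amount to passing the collected descriptions through the helper before returning them.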

Now I can go back to my record controller and build out the logic: extract the record's document to disk, invoke the new InvokeDetectLabels method, and attach the results. Ultimately I should include error handling and logging, but for now this is sufficient.

```csharp
public static void AttachVisionLabelsAsTerms(Record rec)
{
    // formulate local path names (Path.Combine avoids a doubled separator,
    // since GetTempPath() already ends with one)
    string fileName = $"{rec.Uri}.{rec.Extension}";
    string fileDirectory = System.IO.Path.Combine(System.IO.Path.GetTempPath(), "visionApi");
    string filePath = System.IO.Path.Combine(fileDirectory, fileName);

    // create storage location on disk
    if (!System.IO.Directory.Exists(fileDirectory))
        System.IO.Directory.CreateDirectory(fileDirectory);

    // extract the file
    if (!System.IO.File.Exists(filePath))
        rec.GetDocument(filePath, false, "GoogleVisionApi", filePath);

    // get the labels
    List<string> labels = InvokeDetectLabels(filePath);

    // process the labels
    foreach (var label in labels)
    {
        AttachTerm(rec, label);
    }

    // clean up my mess
    if (System.IO.File.Exists(filePath))
    {
        try { System.IO.File.Delete(filePath); }
        catch (Exception) { /* best effort: leave the file if it can't be deleted */ }
    }
}
```
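As written, the extracted file is only deleted when every earlier step succeeds; if the Vision call throws, the copy stays in the temp folder. One way to guarantee cleanup is a try/finally wrapper. The sketch below is a self-contained C# 9 top-level program; BuildTempFilePath and WithTempFile are hypothetical helper names, with the extract delegate standing in for rec.GetDocument and the work delegate for the label detection and keyword attachment.

```csharp
using System;
using System.IO;

// Hypothetical helper: %TEMP%\visionApi\<uri>.<extension>, mirroring the naming above.
string BuildTempFilePath(long recordUri, string extension) =>
    Path.Combine(Path.GetTempPath(), "visionApi", $"{recordUri}.{extension}");

// Hypothetical helper: extracts to filePath, runs the work, and always tries to
// delete the file afterwards, even if extract or work throws.
T WithTempFile<T>(string filePath, Action<string> extract, Func<string, T> work)
{
    Directory.CreateDirectory(Path.GetDirectoryName(filePath)); // no-op if it exists
    try
    {
        // skip extraction if a copy is already there, as the original method does
        if (!File.Exists(filePath)) extract(filePath);
        return work(filePath);
    }
    finally
    {
        try
        {
            if (File.Exists(filePath)) File.Delete(filePath);
        }
        catch (IOException) { /* still locked: leave it for a later run */ }
        catch (UnauthorizedAccessException) { /* no permission: leave it */ }
    }
}

var path = BuildTempFilePath(12345, "jpg");
int length = WithTempFile(path,
    extract: p => File.WriteAllText(p, "fake image bytes"),  // stands in for rec.GetDocument
    work: p => File.ReadAllText(p).Length);                  // stands in for the Vision call
Console.WriteLine(length);             // 16
Console.WriteLine(File.Exists(path));  // False
```

The wrapper keeps the cleanup in one place, so adding error handling and logging later does not require repeating the delete logic in every catch block.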

I'll also need to create a new method named AttachTerm. It takes a label provided by Google and attaches it to the record as a keyword (thesaurus term), creating the term first if it does not yet exist.

```csharp
private static void AttachTerm(Record rec, string label)
{
    // if record does not already contain keyword
    if (!rec.Keywords.Contains(label))
    {
        // fetch the keyword; an exception here is treated as "not found"
        Keyword keyword = null;
        try
        {
            keyword = new Keyword(rec.Database, label);
        }
        catch (Exception)
        {
            // fall through and create it below
        }
        if (keyword == null)
        {
            // when it doesn't exist, create it
            keyword = new Keyword(rec.Database);
            keyword.Name = label;
            keyword.Save();
        }
        // attach it
        rec.AttachKeyword(keyword);
        rec.Save();
    }
}
```
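The fetch-or-create logic above uses an exception to signal that a keyword doesn't exist. Where a non-throwing lookup is available, the same pattern can be written without exceptions. The following is a minimal sketch in which a Dictionary stands in for the thesaurus and a HashSet for the record's attached keywords; both stand-ins and the helper names are hypothetical, not Content Manager SDK types. It also compares whole terms case-insensitively; if Record.Keywords is a delimited string, a plain Contains check on it would also match fragments (for example "Cat" inside "Caterpillar").

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-ins, not SDK types: 'thesaurus' plays the CM keyword table
// (name -> keyword id) and 'attached' the keywords already on one record.
var thesaurus = new Dictionary<string, int>(StringComparer.OrdinalIgnoreCase);
var attached = new HashSet<string>(StringComparer.OrdinalIgnoreCase);
int nextId = 1;

// Fetch the keyword if it exists, otherwise create it; no exception needed.
int GetOrCreateKeyword(string name)
{
    if (!thesaurus.TryGetValue(name, out int id))
    {
        id = nextId++;          // "create it"
        thesaurus[name] = id;
    }
    return id;
}

// Attach a label once per record; returns true only when it was newly attached.
bool AttachTerm(string label)
{
    if (attached.Contains(label)) return false;  // whole-term, case-insensitive match
    GetOrCreateKeyword(label);
    attached.Add(label);
    return true;
}

Console.WriteLine(AttachTerm("Cat"));          // True
Console.WriteLine(AttachTerm("cat"));          // False (already attached)
Console.WriteLine(AttachTerm("Caterpillar"));  // True (not a substring match)
```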

Almost there! The last step is to go back to the event processor add-in and update it to use the record controller. I also need to make sure I only call the Vision API for supported image types, and only when a document is attached to or replaced on a record. After making those changes I'm left with the code shown below.

```csharp
using System;
using System.Collections.Generic;
using HP.HPTRIM.SDK;
using CMRamble.VisionApi;

namespace CMRamble.EventProcessor.VisionApi
{
    public class Addin : TrimEventProcessorAddIn
    {
        // Exact, case-insensitive match; Contains() on a comma-separated string
        // would also accept fragments such as "pg" or an empty extension.
        private static readonly HashSet<string> SupportedExtensions =
            new HashSet<string>(StringComparer.OrdinalIgnoreCase) { "png", "jpg", "jpeg", "bmp" };

        public override void ProcessEvent(Database db, TrimEvent evt)
        {
            switch (evt.EventType)
            {
                case Events.DocAttached:
                case Events.DocReplaced:
                    if (evt.RelatedObjectType == BaseObjectTypes.Record)
                    {
                        InvokeVisionApi(new Record(db, evt.RelatedObjectUri));
                    }
                    break;
                default:
                    break;
            }
        }

        private void InvokeVisionApi(Record record)
        {
            if (!string.IsNullOrEmpty(record.Extension) && SupportedExtensions.Contains(record.Extension))
            {
                RecordController.AttachVisionLabelsAsTerms(record);
            }
        }
    }
}
```

Next I copied the compiled solution onto the workgroup server and registered the add-in via the Enterprise Studio.

Before I can test it, though, I need to create a service account within Google Cloud. Once it's created, I download the service account's key as a JSON file and place it on the server.

The client library expects the path to the JSON file in the GOOGLE_APPLICATION_CREDENTIALS environment variable, which I set as a system variable via System Properties in the Control Panel. The file itself can be placed anywhere on the server that the CM service account can read.
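A missing or unreadable key file only surfaces once the first image is processed, so it can help to check the setup up front. A minimal sketch, assuming the add-in runs with the environment of the CM service; CheckGoogleCredentials is a hypothetical helper. Environment variables are copied into a process when it starts, so services that were already running may need a restart (or the server a reboot) before they see a newly added system variable.

```csharp
using System;
using System.IO;

// Hypothetical fail-fast check: returns null when the key file looks usable,
// otherwise a message the add-in could log.
// GOOGLE_APPLICATION_CREDENTIALS is the variable Google's client libraries read
// to locate a service account key file.
string CheckGoogleCredentials()
{
    string path = Environment.GetEnvironmentVariable("GOOGLE_APPLICATION_CREDENTIALS");
    if (string.IsNullOrWhiteSpace(path))
        return "GOOGLE_APPLICATION_CREDENTIALS is not set for this process.";
    // File.Exists also returns false when this account lacks permission to see the file.
    if (!File.Exists(path))
        return $"Key file '{path}' was not found or is not accessible to this account.";
    return null;
}

string problem = CheckGoogleCredentials();
Console.WriteLine(problem ?? "Google credentials key file found.");
```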

Woot woot! I'm ready to test. I should now be able to drop an image into the system and see some results! I'll use the same image as the documentation so that I can check my results against theirs.

Sweet! Now I don't need to make users pick terms... let the cloud do it for me!